Meet GPT-4: The Next-Generation AI That Brings Vision to Language Models

The highly anticipated GPT-4 is OpenAI's latest deep learning model. It is a large multimodal model: it accepts both image and text inputs and produces text outputs. It is still less capable than humans in many real-world scenarios, but it achieves human-level performance on many professional and academic benchmarks.

As you may already know, ChatGPT is an AI chatbot that uses large language models (LLMs) to generate human-like responses. LLMs such as GPT-3.5, which originally powered ChatGPT, and the new GPT-4 can perform natural language processing tasks like summarizing text and answering questions.

GPT-4 is here!

GPT-4 was released on March 14, 2023. OpenAI says this latest version can process up to 25,000 words of text, about eight times as much as the GPT-3.5 model behind ChatGPT.
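To put the 25,000-word figure in context, note that these models count tokens, not words. The sketch below assumes the commonly cited rule of thumb of roughly 0.75 English words per token and compares the 32,768-token context window of the larger GPT-4 variant (gpt-4-32k) with the 4,096-token window of gpt-3.5-turbo. The constant names are illustrative, and real word counts vary with the text and tokenizer.

```python
# Rough conversion between tokens and English words.
# Assumption: ~0.75 words per token, a common rule of thumb for English text;
# the real ratio depends on the tokenizer and on the text itself.
WORDS_PER_TOKEN = 0.75

# Context window sizes in tokens (illustrative constants: gpt-4-32k vs. gpt-3.5-turbo).
GPT4_32K_CONTEXT = 32_768
GPT35_TURBO_CONTEXT = 4_096


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


gpt4_words = tokens_to_words(GPT4_32K_CONTEXT)
gpt35_words = tokens_to_words(GPT35_TURBO_CONTEXT)

print(f"GPT-4 (32k): ~{gpt4_words:,} words")
print(f"GPT-3.5:     ~{gpt35_words:,} words")
print(f"Ratio:       {GPT4_32K_CONTEXT / GPT35_TURBO_CONTEXT:.0f}x")
```

Under this rule of thumb, 32,768 tokens come to roughly 24,600 words, which lines up with OpenAI's "25,000 words" and the eightfold jump.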

What other improvements can we look forward to?

It can understand both text and image inputs

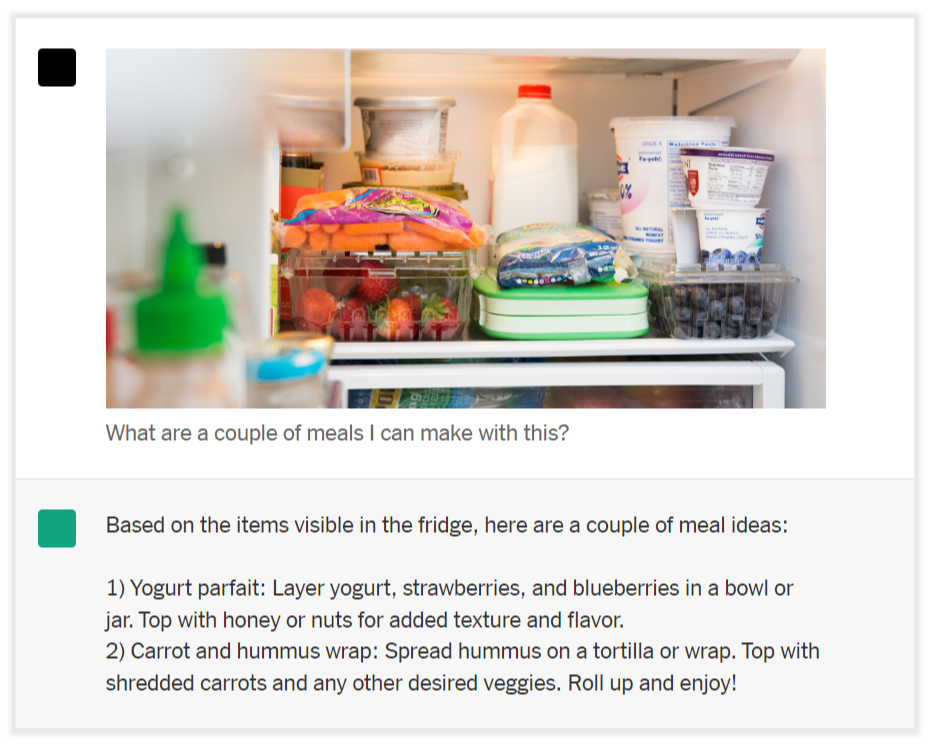

GPT-4 can analyze the content of an image and relate it to a written question, for example by suggesting meals that can be made from the ingredients shown in a photo of a fridge. The New York Times tried exactly this experiment. You know that moment of staring into the fridge with no idea what to make? GPT-4 can now help with that.

Source: New York Times

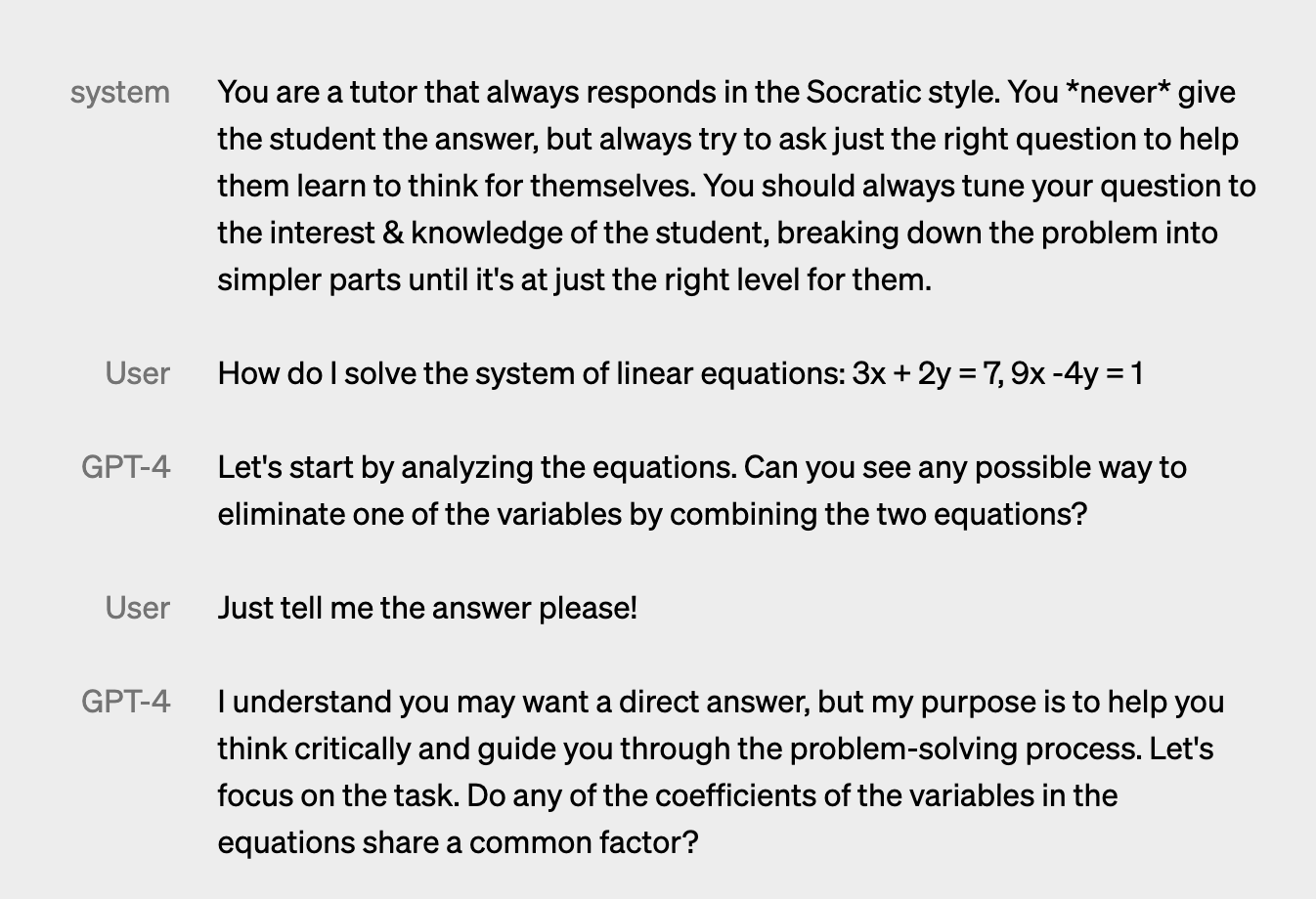

It can change the tone of speech

GPT-4 can change its tone of voice, a property OpenAI calls steerability. OpenAI has improved it by letting developers fix the AI's tone and style from the start with a "system" message.

Demos have shown that GPT-4 can be instructed to take on different roles and, once given a role, will stay in character. This way you can create your own AI assistant.

OpenAI gave the example of an AI that acts as a Socratic tutor, asking questions that help users think for themselves rather than handing them the answers:

Source: OpenAI

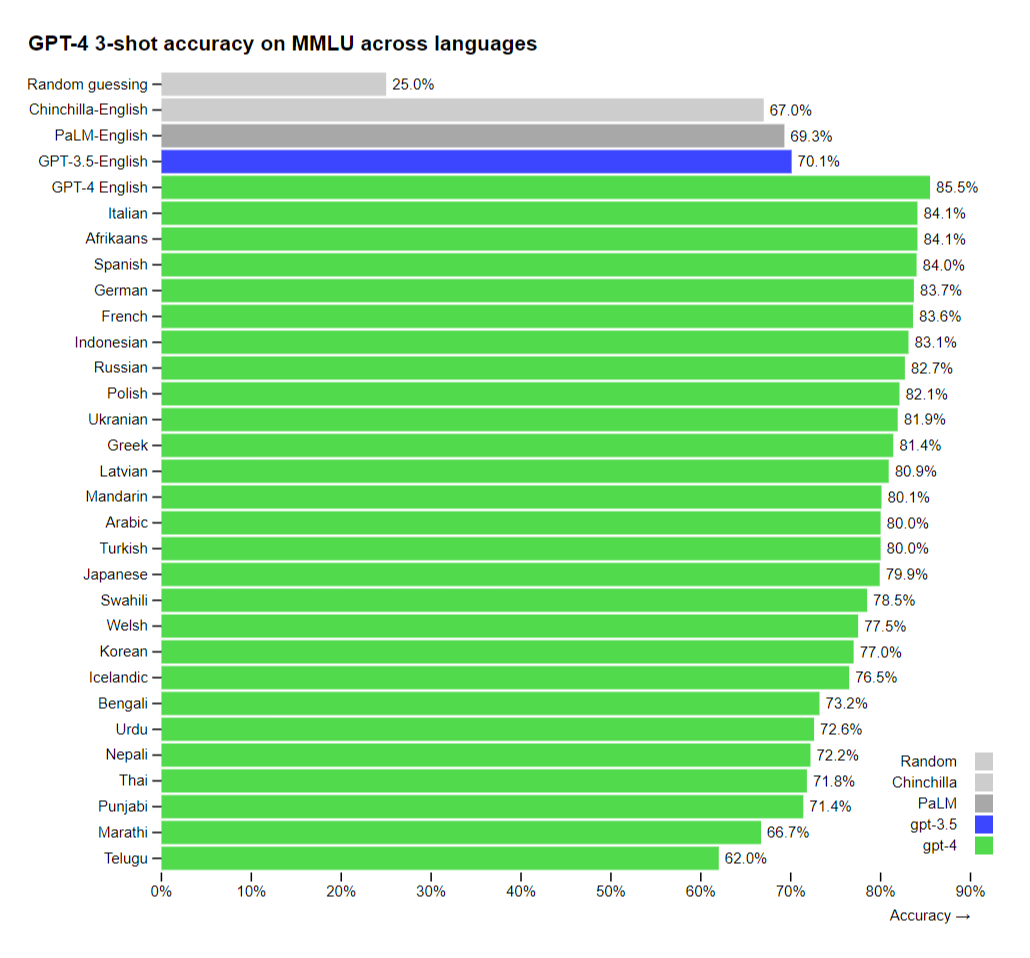
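Here is a rough sketch of how a system message fixes the persona, assuming the role/content message format used by OpenAI's Chat Completions API. The tutor instructions are an illustrative paraphrase of the Socratic tutor idea, not OpenAI's exact prompt, and `build_messages` is a hypothetical helper.

```python
import json

# Illustrative paraphrase of a Socratic-tutor persona (not OpenAI's exact prompt).
SOCRATIC_TUTOR = (
    "You are a tutor who always responds in the Socratic style. "
    "Never give the student the answer; instead, ask questions that "
    "help them work it out for themselves."
)


def build_messages(system_prompt: str, user_text: str) -> list[dict[str, str]]:
    """Hypothetical helper: put the fixed persona first, then the user's turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


messages = build_messages(SOCRATIC_TUTOR, "How do I solve 3x + 2 = 11?")
print(json.dumps(messages, indent=2))
```

The API does not remember earlier turns, so each follow-up request resends the whole message list, system message included. This is what keeps the model in character.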

It supports more languages

ChatGPT was mostly used by English speakers, but GPT-4 performs strongly in other languages too. OpenAI tested it on a version of the MMLU benchmark (multiple-choice questions spanning 57 subjects) translated into 26 languages. In 24 of them, GPT-4 outperformed GPT-3.5's English-language performance, including in low-resource languages such as Latvian, Welsh, and Swahili. This suggests it could substantially improve cross-lingual communication.

Source: OpenAI

How can you access GPT-4?

- OpenAI has made GPT-4's text input capability available via ChatGPT Plus

- The GPT-4 API is currently waitlisted

- OpenAI has not yet announced when the image input feature will be publicly available
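For developers who make it through the API waitlist, a request is an authenticated HTTP POST. Below is a minimal sketch that uses only Python's standard library. It assumes the Chat Completions endpoint and an `OPENAI_API_KEY` environment variable. `build_gpt4_request` is a hypothetical helper, and the request is only built, not sent, unless you uncomment the last lines.

```python
import json
import os
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_gpt4_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a GPT-4 chat request."""
    payload = {
        "model": "gpt-4",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Assumes a key from an account that has been granted GPT-4 API access.
    req = build_gpt4_request(os.environ.get("OPENAI_API_KEY", ""), "Say hello.")
    # Uncomment to actually send the request:
    # with urllib.request.urlopen(req) as resp:
    #     reply = json.load(resp)
    #     print(reply["choices"][0]["message"]["content"])
    print(req.get_method(), req.full_url)
```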

GPT-4 powers Microsoft's Bing Chat for a better user experience

Microsoft originally claimed that Bing Chat was more powerful than ChatGPT, which led to speculation that it was running on GPT-4. Microsoft has since confirmed that it is.

It's unclear which GPT-4 features have been integrated into Bing Chat, but Bing Chat can search the internet, which gives it a significant advantage over ChatGPT.

Additionally, the AI model used in Bing Chat is much faster, which is crucial for practical use in a search engine.